🌐 Paper Copilot and the Push for Transparent Peer Review in AI/ML Research

By CSPaper.org, based on the position paper "The Artificial Intelligence and Machine Learning Community Should Adopt a More Transparent and Regulated Peer Review Process" (Jing Yang, ICML 2025)

As submission numbers to top AI and ML conferences exceed 10,000 annually, the peer review system is under unprecedented strain. In response, a growing movement advocates a more transparent, participatory, and regulated approach to peer review. One anchor for that movement is Paper Copilot, a community-driven analytics platform that aggregates and visualizes review process data from conferences such as ICLR, NeurIPS, CVPR, and ICML.

This article unpacks the findings of the ICML 2025 position paper by Jing Yang, which draws on two years of data from Paper Copilot to make the case for open and structured review systems in AI/ML.

🧭 What is Paper Copilot?

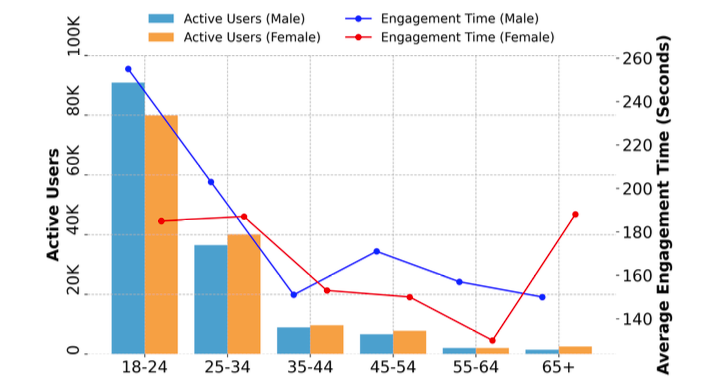

Paper Copilot is an independently developed platform designed to democratize access to peer review metrics. Built by a PhD student without institutional backing, it has reached more than 200,000 active users in 177 countries, with particularly strong uptake among early-career researchers aged 18–34.

Core Features:

- Community-submitted and API-collected review scores, confidence levels, and discussion logs.

- Visualizations of review timelines, score distributions, and author statistics.

- Interactive analysis of conference-level engagement, user demographics, and score evolution over time.

Figure: Global user distribution map and engagement by age and gender.

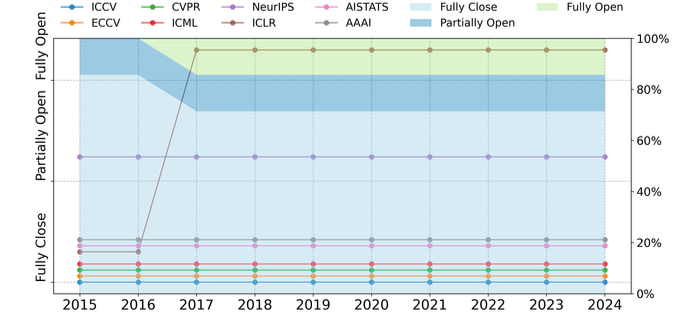

Review Models: Fully Open, Partially Open, and Closed

The paper categorizes conferences into three disclosure modes:

- Fully Open (e.g., ICLR): All reviews and discussions visible from the start.

- Partially Open (e.g., NeurIPS): Reviews released post-decision.

- Fully Closed (e.g., ICML, CVPR): No public review content at any stage.
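The three modes above differ only in when review content becomes public, which can be sketched as a small lookup. This is an illustrative model, not code from the paper or from Paper Copilot: the names `DisclosureMode`, `Stage`, `EXAMPLE_VENUES`, and `reviews_public` are hypothetical, and the venue mapping simply mirrors the examples in the list (actual venue policies can change from year to year).

```python
from enum import Enum


class DisclosureMode(Enum):
    """Hypothetical labels for the three disclosure modes described above."""
    FULLY_OPEN = "fully_open"
    PARTIALLY_OPEN = "partially_open"
    FULLY_CLOSED = "fully_closed"


class Stage(Enum):
    """Simplified two-stage view of a review cycle (an assumption)."""
    UNDER_REVIEW = "under_review"
    POST_DECISION = "post_decision"


# Example venues per mode, taken from the list above; not an exhaustive
# or year-specific record of conference policy.
EXAMPLE_VENUES = {
    "ICLR": DisclosureMode.FULLY_OPEN,
    "NeurIPS": DisclosureMode.PARTIALLY_OPEN,
    "ICML": DisclosureMode.FULLY_CLOSED,
    "CVPR": DisclosureMode.FULLY_CLOSED,
}


def reviews_public(mode: DisclosureMode, stage: Stage) -> bool:
    """Return True if review content is publicly visible at the given stage."""
    if mode is DisclosureMode.FULLY_OPEN:
        # Visible from the start, so visible at every stage.
        return True
    if mode is DisclosureMode.PARTIALLY_OPEN:
        # Released only once decisions are out.
        return stage is Stage.POST_DECISION
    # Fully closed: no public review content at any stage.
    return False
```

For example, `reviews_public(EXAMPLE_VENUES["NeurIPS"], Stage.UNDER_REVIEW)` evaluates to `False`, while the same call with `Stage.POST_DECISION` evaluates to `True`.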

Figure: Review disclosure preferences across conferences and years.

Despite the rise of platforms like OpenReview, many conferences still opt for closed or partially open settings, often due to concerns about reviewer anonymity, misuse of ideas, or company IP protection.

Community Engagement and Evidence of Demand

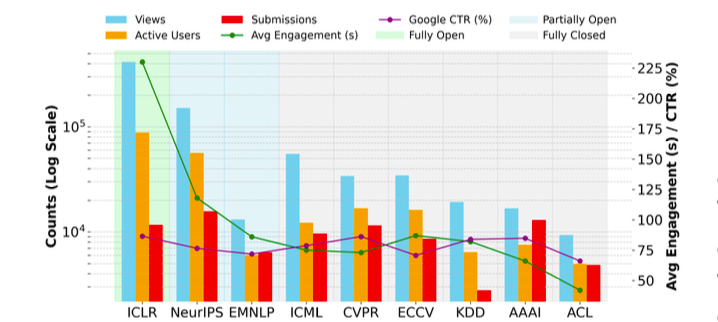

The paper uses traffic analytics to validate the appetite for transparency:

- Organic Search Dominance: 59.9% of Paper Copilot's traffic comes from search engines, which suggests that researchers are actively seeking peer review statistics.

- User Behavior: Conferences with open review modes (like ICLR) see 4–6x more engagement (views, active users, session duration) than closed ones.

Figure: Views, engagement time, and click-through rate (CTR) by review model.

🧠 Benefits of Fully Open Reviews

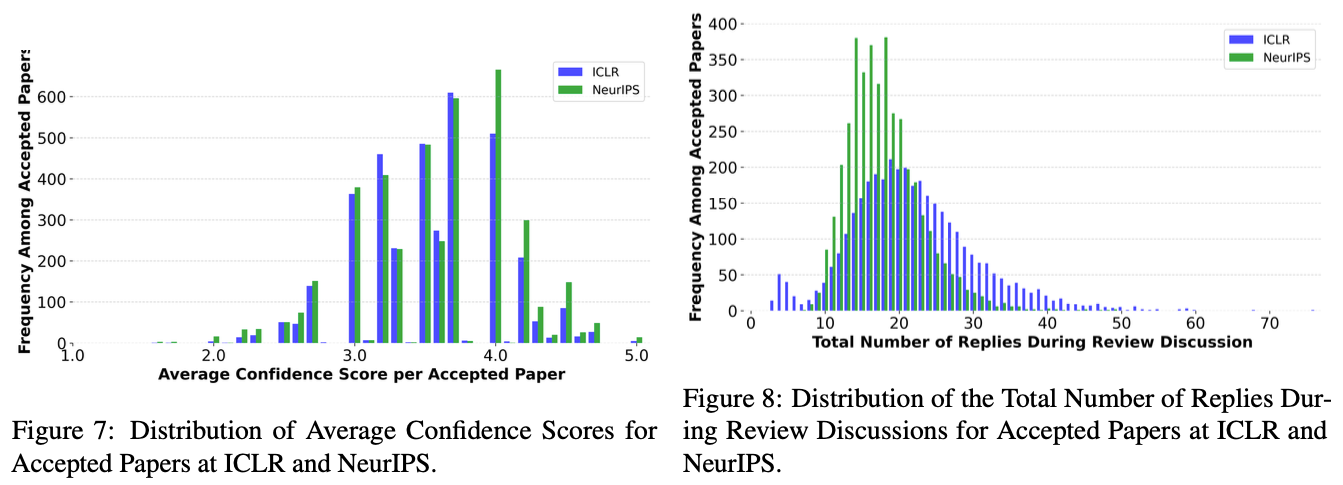

The paper documents several benefits tied to open reviewing:

- Increased Discussion Depth: ICLR features broader and more active discussion threads than NeurIPS or ICML, with some threads reaching over 70 replies.

- Mitigated Reviewer Overconfidence: Public exposure is associated with more careful, measured reviews; confidence scores are more balanced in open settings.

- Transparent Dialogue: Real-time visibility facilitates constructive debate and reproducibility.

Challenges with Closed Review Systems

The paper identifies systemic flaws in closed reviews:

- Inexperienced Reviewers: Younger researchers (aged 18–24) are often overburdened without training, leading to uneven review quality.

- AI-Generated Reviews: The opaque nature of closed systems makes it difficult to detect LLM-generated or plagiarized content.

- Authorship Inconsistencies: Name changes post-acceptance have gone untracked, highlighting accountability gaps.

Community Speaks: Survey Results

A user survey on Paper Copilot revealed that 57% of respondents would willingly share their review scores even for closed-review venues like CVPR. This indicates a clear grassroots demand for transparency across conference formats and subfields.

🧭 Addressing Concerns: Balancing Openness and Protection

While supporting transparency, the paper acknowledges valid counterarguments:

- Plagiarism Risks: Open submissions might expose novel ideas prematurely.

- IP Concerns for Industry: Open preprints can jeopardize patents in "first-to-file" jurisdictions like the U.S.

- Reviewer Reluctance: Public visibility may discourage bold, critical feedback.

The author suggests that default transparency with opt-out protections, especially for industrial or high-risk research, offers a feasible compromise.

🧾 Conclusion

This position paper doesn't merely propose transparency for its own sake. It provides a data-backed argument showing that transparent peer review:

- Encourages richer academic discourse,

- Reduces opacity and potential misconduct,

- Empowers early-career researchers,

- Aligns with community-driven values of open science.

As AI/ML continues to scale, the research community must evolve its review mechanisms accordingly — embracing openness not just as a feature, but as a foundational norm.