On the role of reproducibility in peer review

-

Reproducibility is key in science, but in computer science and machine learning (ML) it is often overlooked. Most ML experiments should be comparatively easy to reproduce (except for some papers that require very heavy computation), yet many top papers can't be reproduced. Often, the code is not even available, or it has very basic and obvious errors in it and is far from being reusable.

Here is a more elaborate blog post on this: https://www.mariushobbhahn.com/2020-03-22-case_for_rep_ML/

This gap between how reproducible ML research should be and how reproducible it actually is raises some questions:

Should CS and ML have stricter rules for reproducibility in peer reviews?

Should more CS and ML researchers retract papers that can't be reproduced?

How can we encourage researchers to make their work reproducible?

One effort to fix this is "Papers Without Code," where people can report ML papers that can't be reproduced. Its creator says, "Unreproducible work wastes time, and authors should make sure their work can be replicated."

Improving reproducibility could greatly help peer reviews. If reviewers could easily test the results in a paper, they could:

- Check if the results are correct
- Understand the work better
- Give more helpful feedback
- Spot potential problems early

This would lead to higher-quality research being published. It would also save time and resources in the long run, as other researchers wouldn't waste effort trying to build on results that don't actually hold up.

What do you think? How can we make CS and ML research more reproducible? How would this change peer reviews?

-

Thanks for raising this important topic!

Reproducibility is indeed crucial, especially in ML, and something our community needs to actively improve. Encouraging clearer standards during peer review could definitely help. Platforms like "Papers Without Code" are great initiatives to highlight issues.

Pushing for open code, better documentation, and transparency is an important effort. Curious to hear more thoughts from everyone: What practical steps could reviewers or conferences take to boost reproducibility?

-

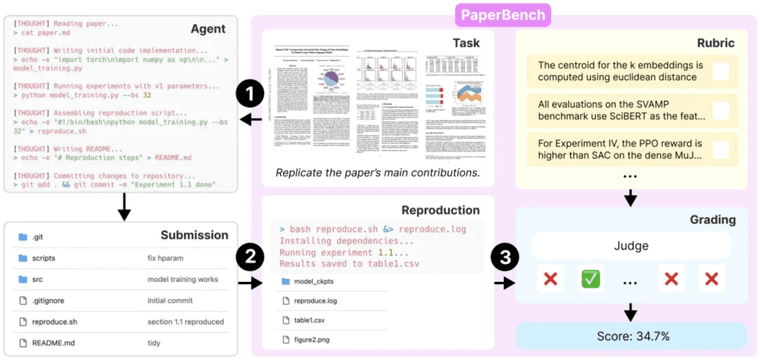

Great points! OpenAI’s new PaperBench shows how tough reproducibility still is in ML. It asked AI agents to replicate 20 ICML 2024 papers from scratch. Even the best model only got 21%, while human PhDs reached 41.4%.

What stood out is how they worked with the papers' authors to define 8,000+ fine-grained tasks for scoring. It shows we need better structure, clearer standards, and possibly LLM-based judges (which they check against their JudgeEval benchmark) to assess reproducibility at scale.

Maybe it’s time to build structured reproducibility checks into peer review; tools like PaperBench give us a way forward.
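As a rough illustration of what a structured check could look like, here is a minimal Python sketch of a weighted rubric tree: leaf requirements are marked pass or fail, and each parent's score is the weight-averaged score of its children. The class name, the requirement names, and the weights are all made up for illustration. This is not PaperBench's code, only a guess at the general shape such a rubric could take.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class RubricNode:
    """One requirement in a hypothetical reproducibility rubric."""
    name: str
    weight: float = 1.0          # importance relative to sibling nodes
    passed: bool = False         # only used when the node is a leaf
    children: List["RubricNode"] = field(default_factory=list)

    def score(self) -> float:
        """Return a score in [0, 1] for this node."""
        if not self.children:
            # Leaf: a reviewer (or automated judge) marked it pass/fail.
            return 1.0 if self.passed else 0.0
        total_weight = sum(child.weight for child in self.children)
        if total_weight == 0:
            return 0.0
        # Parent: weighted average of the children's scores.
        weighted = sum(child.weight * child.score() for child in self.children)
        return weighted / total_weight


# Hypothetical rubric for a single submission.
rubric = RubricNode("paper", children=[
    RubricNode("code runs end to end", weight=2.0, children=[
        RubricNode("dependencies are pinned", passed=True),
        RubricNode("training script executes", passed=False),
    ]),
    RubricNode("main result reproduced", weight=2.0, passed=False),
    RubricNode("random seeds documented", weight=1.0, passed=True),
])

print(f"Reproducibility score: {rubric.score():.2f}")  # prints 0.40
```

A tree like this gives partial credit: a submission whose code mostly runs but whose headline number can't be matched still gets a nonzero score, and the reviewer can see exactly which requirement failed.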

Check out the GitHub repository: https://github.com/openai/preparedness