Can LLMs Provide Useful Feedback on Research Papers?

Summary of Findings from Stanford’s Large-Scale Empirical Study (arXiv:2310.01783)

This study investigates whether large language models (LLMs), specifically GPT-4, can generate useful scientific feedback on research papers. Using thousands of papers from Nature journals and ICLR, and a user study with 308 researchers, the authors assess both the effectiveness and limitations of LLM-generated reviews.

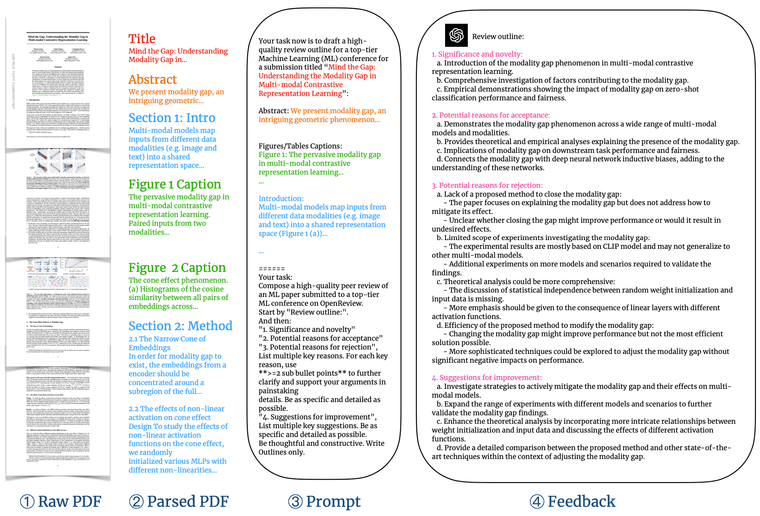

Schematic of the LLM scientific feedback generation system
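As a rough illustration of the generation step, the sketch below assembles a single feedback prompt from text that has already been extracted from a paper. The `ParsedPaper` container, the four section headings, and the character-based truncation are hypothetical choices made for illustration. They are not the study's actual prompt or parser, and the call that sends the prompt to GPT-4 is left out.

```python
from dataclasses import dataclass, field


# Hypothetical container for text already extracted from a paper's PDF.
@dataclass
class ParsedPaper:
    title: str
    abstract: str
    captions: list[str] = field(default_factory=list)
    body: str = ""


# Illustrative review headings (an assumption, not the study's exact prompt).
SECTIONS = [
    "Significance and novelty",
    "Potential reasons for acceptance",
    "Potential reasons for rejection",
    "Suggestions for improvement",
]


def build_feedback_prompt(paper: ParsedPaper, max_body_chars: int = 20_000) -> str:
    """Assemble one prompt asking an LLM for structured, paper-specific feedback."""
    # Crude character-based truncation standing in for real context-length handling.
    body = paper.body[:max_body_chars]
    captions = "\n".join(f"- {c}" for c in paper.captions)
    outline = "\n".join(f"{i}. {s}" for i, s in enumerate(SECTIONS, start=1))
    return (
        "You are reviewing a scientific paper. Give specific, constructive feedback "
        f"organized under these headings:\n{outline}\n\n"
        f"Title: {paper.title}\n\nAbstract: {paper.abstract}\n\n"
        f"Figure and table captions:\n{captions}\n\nMain text:\n{body}\n"
    )


paper = ParsedPaper(
    title="A Toy Paper",
    abstract="We study toys.",
    captions=["Figure 1: A toy."],
    body="Introduction. " * 10,
)
prompt = build_feedback_prompt(paper)
print(prompt)
```

Putting the section headings in the prompt itself pushes the model toward structured output, which makes the individual comments easier to extract and compare against human reviews afterwards.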

Key Findings

1. LLM Feedback Shows High Overlap with Human Reviews

- On Nature papers: 30.85% of GPT-4 comments overlapped with human reviewer comments.

- On ICLR papers: 39.23% overlap, comparable to human-human overlap (35.25%).

- Overlap is higher for weaker papers, reaching 47.09% for rejected ICLR submissions.
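To make "comparable to human-human overlap" concrete, here is a minimal sketch of one way such numbers could be computed, assuming overlap means the share of one reviewer's comments matched by at least one comment from another reviewer. Averaging over individual reviewers and over ordered reviewer pairs is an illustrative assumption. The toy `same_text` matcher, a case-insensitive exact match, stands in for the study's semantic comment matching, and all function names are hypothetical.

```python
from itertools import permutations
from typing import Callable

Matcher = Callable[[str, str], bool]


def hit_rate(a: list[str], b: list[str], match: Matcher) -> float:
    """Fraction of comments in `a` matched by at least one comment in `b`."""
    if not a:
        return 0.0
    return sum(any(match(x, y) for y in b) for x in a) / len(a)


def llm_vs_human(llm: list[str], reviewers: list[list[str]], match: Matcher) -> float:
    """Average hit rate of the LLM's comments against each human reviewer."""
    return sum(hit_rate(llm, r, match) for r in reviewers) / len(reviewers)


def human_vs_human(reviewers: list[list[str]], match: Matcher) -> float:
    """Average hit rate over all ordered pairs of distinct human reviewers."""
    pairs = list(permutations(reviewers, 2))
    return sum(hit_rate(a, b, match) for a, b in pairs) / len(pairs)


def same_text(x: str, y: str) -> bool:
    # Toy matcher: case-insensitive exact match (a stand-in for semantic matching).
    return x.strip().lower() == y.strip().lower()


llm = ["Missing baselines", "Small sample size", "Unclear notation"]
reviewers = [["missing baselines", "typos"], ["small sample size", "missing baselines"]]
print(llm_vs_human(llm, reviewers, same_text))  # 0.5
print(human_vs_human(reviewers, same_text))     # 0.5
```

Computing both numbers with the same matcher and the same averaging is what makes the LLM-human and human-human figures comparable in the first place.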

2. Feedback Is Paper-Specific, Not Generic

- When LLM comments were shuffled across papers, their overlap with human reviews dropped below 1%.

- This indicates that GPT-4's comments are tailored to each paper rather than template-like.
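The shuffling control can be sketched as follows: score each paper's LLM comments against its own human reviews, then against a different paper's reviews, and compare. Two substitutions are made for illustration. Word-set Jaccard similarity replaces the study's semantic matching, and rotating the list of papers by one position stands in for random shuffling. The function names and toy comments are hypothetical.

```python
def jaccard_match(a: str, b: str, threshold: float = 0.5) -> bool:
    """Toy stand-in for semantic matching: word-set Jaccard similarity >= threshold."""
    wa, wb = set(a.lower().split()), set(b.lower().split())
    if not wa or not wb:
        return False
    return len(wa & wb) / len(wa | wb) >= threshold


def overlap(llm_comments: list[str], human_comments: list[str]) -> float:
    """Share of LLM comments that match at least one human comment."""
    if not llm_comments:
        return 0.0
    hits = sum(any(jaccard_match(c, h) for h in human_comments) for c in llm_comments)
    return hits / len(llm_comments)


def mean_overlap(llm_by_paper: list[list[str]], human_by_paper: list[list[str]]) -> float:
    """Average per-paper overlap when paper i's LLM comments meet reviews i."""
    scores = [overlap(lc, hc) for lc, hc in zip(llm_by_paper, human_by_paper)]
    return sum(scores) / len(scores)


def shuffled_overlap(llm_by_paper: list[list[str]], human_by_paper: list[list[str]]) -> float:
    """Control: pair each paper's LLM comments with a *different* paper's reviews.

    Rotating by one position guarantees no paper keeps its own reviews (needs >= 2 papers).
    """
    n = len(human_by_paper)
    if n < 2:
        raise ValueError("need at least two papers to shuffle")
    rotated = [human_by_paper[(i + 1) % n] for i in range(n)]
    return mean_overlap(llm_by_paper, rotated)


llm = [["the sample size is too small"], ["add experiments on more datasets"]]
human = [["sample size is too small"], ["please add experiments on more datasets"]]
print(mean_overlap(llm, human))      # 1.0
print(shuffled_overlap(llm, human))  # 0.0
```

If comments were generic boilerplate, they would match other papers' reviews about as well as their own, so a large drop under shuffling is the signal of paper-specific feedback.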

3. LLM Captures Major Issues

- GPT-4 is more likely to identify concerns mentioned by multiple reviewers.

- Comments that appear earlier in human reviews, which are likely the more important ones, are also more likely to be raised by GPT-4.
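Both observations amount to grouping human-raised concerns by some attribute and computing the share that GPT-4 also raised in each group. The minimal sketch below shows that grouping. The record fields (`n_reviewers`, `position`, `matched_by_llm`) and the toy records are invented for illustration, and giving each concern a single position is a simplification, since the same concern can appear at different positions in different reviews.

```python
from collections import defaultdict
from typing import Callable


def hit_rate_by(concerns: list[dict], key: Callable[[dict], int]) -> dict[int, float]:
    """Share of human-raised concerns that the LLM also raised, grouped by `key`."""
    totals: dict[int, int] = defaultdict(int)
    hits: dict[int, int] = defaultdict(int)
    for concern in concerns:
        group = key(concern)
        totals[group] += 1
        hits[group] += int(concern["matched_by_llm"])
    return {g: hits[g] / totals[g] for g in sorted(totals)}


# Invented toy records: how many reviewers raised each concern, its position
# in the review (1 = first comment), and whether the LLM raised it too.
concerns = [
    {"n_reviewers": 1, "position": 3, "matched_by_llm": False},
    {"n_reviewers": 1, "position": 2, "matched_by_llm": False},
    {"n_reviewers": 2, "position": 1, "matched_by_llm": True},
    {"n_reviewers": 2, "position": 2, "matched_by_llm": False},
    {"n_reviewers": 3, "position": 1, "matched_by_llm": True},
]
print(hit_rate_by(concerns, key=lambda c: c["n_reviewers"]))  # {1: 0.0, 2: 0.5, 3: 1.0}
print(hit_rate_by(concerns, key=lambda c: c["position"]))     # {1: 1.0, 2: 0.0, 3: 0.0}
```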

4. Different Focus Areas from Humans

- GPT-4 over-indexes on:
  - Implications of research (7.3× more than humans)
  - Requests for experiments on more datasets

- GPT-4 under-indexes on:
  - Novelty (10.7× less likely than humans)
  - Ablation experiments

- These differences suggest that LLM and human reviews are complementary.
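Figures like 7.3× and 10.7× are ratios of rates. Assuming each is the share of LLM comments that touch an aspect divided by the share of human comments that touch it (the study's exact normalization may differ), the arithmetic looks like this. The `relative_frequency` helper is hypothetical and the counts are made up.

```python
def relative_frequency(llm_hits: int, llm_total: int, human_hits: int, human_total: int) -> float:
    """How many times more often an aspect appears in LLM comments than in human ones.

    Values above 1 mean the LLM over-indexes on the aspect; below 1, it under-indexes.
    """
    if llm_total <= 0 or human_total <= 0 or human_hits <= 0:
        raise ValueError("totals and human_hits must be positive")
    # Equivalent to (llm_hits / llm_total) / (human_hits / human_total),
    # computed with a single division to limit floating-point error.
    return (llm_hits * human_total) / (llm_total * human_hits)


# Invented counts for illustration only.
over = relative_frequency(llm_hits=30, llm_total=200, human_hits=4, human_total=200)
under = relative_frequency(llm_hits=1, llm_total=100, human_hits=10, human_total=100)
print(over)       # 7.5, i.e. "7.5x more often than humans"
print(1 / under)  # 10.0, i.e. "10x less likely than humans"
```

An "N× less likely" figure is simply the reciprocal of a ratio below 1, which is why the under-indexed case is reported as `1 / under`.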

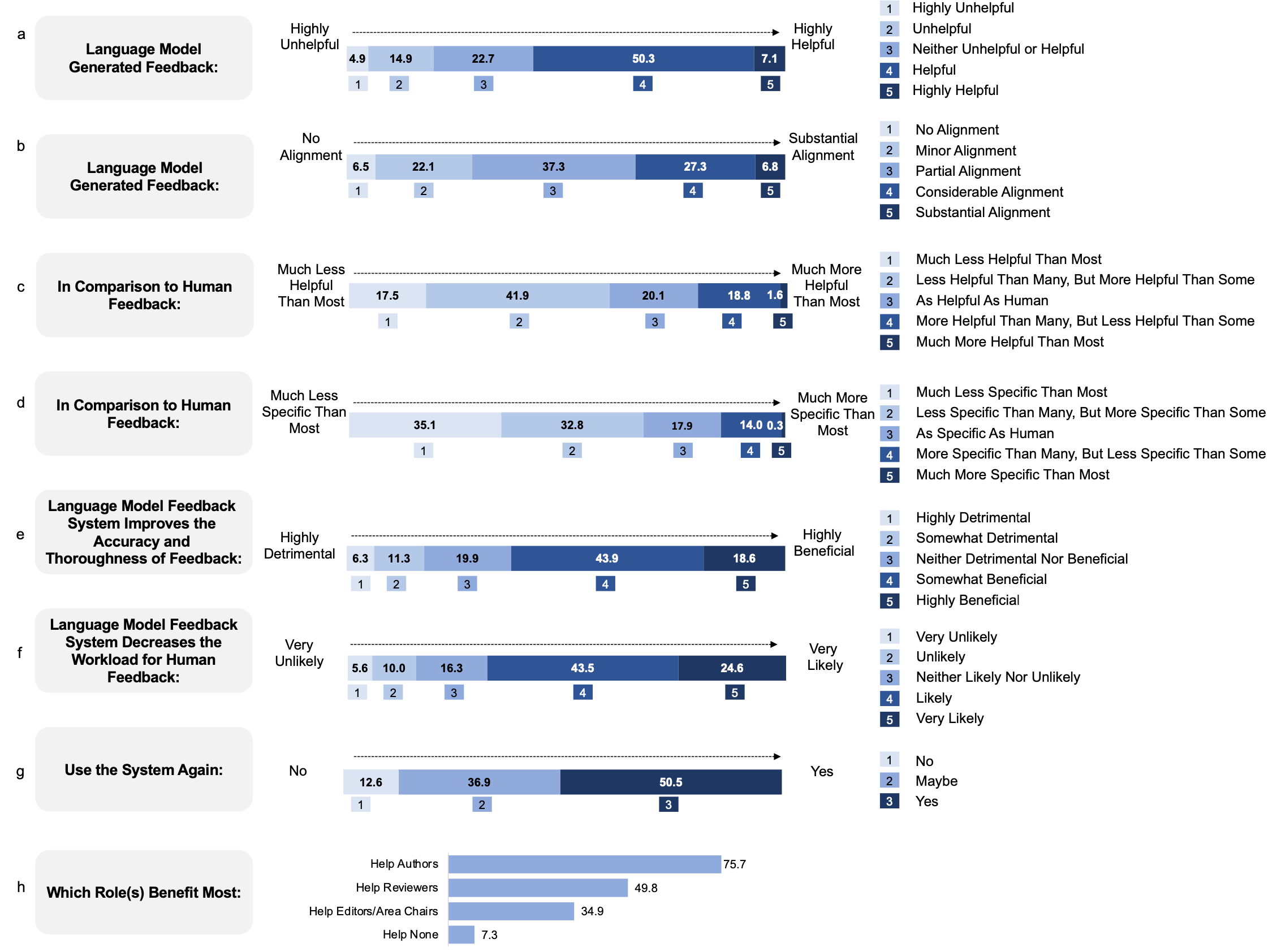

🧪 Prospective User Study (n = 308)

- 57.4%: Found GPT-4 feedback helpful or very helpful.

- 82.4%: Said it’s better than at least some human reviewers.

- 65.3%: Said GPT-4 pointed out issues that human reviewers missed.

- 50.5%: Would use the GPT-4 system again.

Human study of LLM and human review feedback

User feedback: “The review took five minutes and was of reasonably high quality. This could tremendously help authors polish their submissions.”

Limitations

- Lacks in-depth technical critique (e.g., of model design or architectural flaws).

- Sometimes too vague or generic.

- Cannot handle visuals like graphs or math formulas.

- Should not be used as a replacement for human expert reviews.

Final Takeaways

GPT-4 can augment the scientific review process by offering fast, consistent, and often insightful feedback, which is especially valuable for early drafts and for under-resourced researchers.

But it cannot replace human judgment. The future lies in human-AI collaboration for scientific peer review.

Code & Data: GitHub Repository

Authors: Weixin Liang et al., Stanford University

Paper: arXiv:2310.01783